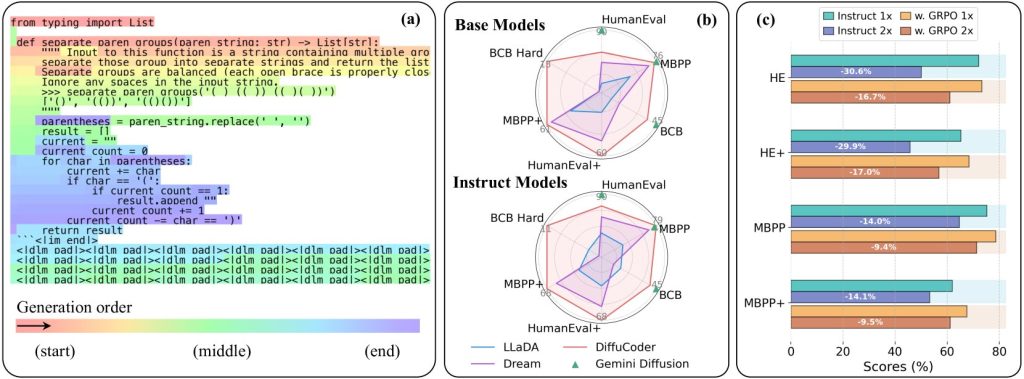

Apple quietly dropped a new AI model on Hugging Face with an interesting twist. Instead of writing code the way traditional LLMs generate text (left to right, top to bottom), it can also write out of order and improve multiple chunks at once.

The result is faster code generation, at a performance that rivals top open-source coding models. Here’s how it works.

The nerdy bits

Here are some (overly simplified, in the name of efficiency) concepts that are important to understand before we can move on.

Autoregression

Traditionally, most LLMs have been autoregressive. This means that when you ask them something, they process your entire question, predict the first token of the answer, reprocess the entire question with the first token, predict the second token, and so on. This makes them generate text like most of us read: left to right, top to bottom.
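To make that loop concrete, here is a minimal sketch in Python. The lookup table and the `predict_next` function are hypothetical stand-ins for a real model (which would look at the whole sequence, not just the last token); the point is only that each new token costs one more pass.

```python
# Toy stand-in for a language model: maps the last token to the next one.
# A real LLM conditions on the entire sequence; this table is an assumption
# made purely for illustration.
TOY_NEXT_TOKEN = {
    "<start>": "def",
    "def": "add",
    "add": "(a, b):",
    "(a, b):": "return",
    "return": "a + b",
    "a + b": "<end>",
}


def predict_next(tokens):
    """Hypothetical model call: takes the sequence so far, returns one token."""
    return TOY_NEXT_TOKEN[tokens[-1]]


def generate_autoregressively(prompt_tokens, max_new_tokens=10):
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = predict_next(tokens)  # one model pass per new token
        if next_token == "<end>":
            break
        tokens.append(next_token)  # the new token becomes part of the input
    return tokens[len(prompt_tokens):]


generated = generate_autoregressively(["<start>"])
print(" ".join(generated))  # prints: def add (a, b): return a + b
```

Five tokens took five passes, strictly in order. That one-token-per-pass cost is what the diffusion approach tries to get around.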

Temperature

LLMs have a setting called temperature that controls how random the output can be. When predicting the next token, the model assigns probabilities to all possible options. A lower temperature makes it more likely to choose the most probable token, while a higher temperature gives it more freedom to pick less likely ones.
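A common way to apply temperature is to divide the model’s raw scores (logits) by it before turning them into probabilities. The sketch below assumes that standard softmax formulation; the three logits and the candidate tokens are made up for illustration.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - largest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens.
candidates = ["return", "print", "pass"]
logits = [2.0, 1.0, 0.1]

low = softmax_with_temperature(logits, temperature=0.2)
high = softmax_with_temperature(logits, temperature=1.2)

print("T=0.2:", [round(p, 3) for p in low])   # almost all mass on "return"
print("T=1.2:", [round(p, 3) for p in high])  # mass spread across options

# Sampling a token from the flatter distribution.
rng = random.Random(0)
sampled = rng.choices(candidates, weights=high, k=1)[0]
print("sampled at T=1.2:", sampled)
```

At 0.2 the top candidate gets over 99% of the probability, while at 1.2 it gets roughly 60%, so the less likely tokens actually have a chance of being picked.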

Diffusion

An alternative to autoregressive models is diffusion models, which have been more often used by image models like Stable Diffusion. In a nutshell, the model starts with a fuzzy, noisy image, and it iteratively removes the noise while keeping the user request in mind, steering it towards something that looks more and more like what the user requested.

Still with us? Great!

Lately, some large language models have looked to the diffusion architecture to generate text, and the results have been pretty promising.

Why am I telling you all this? Because now you can see why diffusion-based text models can be faster than autoregressive ones, since they can basically (again, basically) iteratively refine the entire text in parallel.

This behavior is especially useful for programming, where global structure matters more than linear token prediction.
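Here is a rough sketch of what refining the whole text in parallel can look like for a masked diffusion model. Everything in it is an assumption made for illustration: the `propose` function stands in for a real model pass, and the target tokens and confidence scores are hard-coded. Each pass guesses every position at once, and only the most confident guesses are kept.

```python
MASK = "[MASK]"

# Hard-coded "right answer" and per-position confidence, both hypothetical.
TARGET = ["def", "add", "(", "a", ",", "b", ")", ":", "return", "a", "+", "b"]
CONFIDENCE = [0.9, 0.5, 0.8, 0.4, 0.7, 0.3, 0.75, 0.95, 0.85, 0.35, 0.6, 0.2]


def propose(tokens):
    """Hypothetical model pass: a (token, confidence) guess for every position."""
    return [(TARGET[i], CONFIDENCE[i]) for i in range(len(tokens))]


def denoise(length, tokens_per_step):
    tokens = [MASK] * length  # start from a fully masked ("noisy") sequence
    history = []
    while MASK in tokens:
        guesses = propose(tokens)  # one pass covers all positions
        masked = [i for i, tok in enumerate(tokens) if tok == MASK]
        # Keep only the most confident guesses; the rest stay masked for now.
        masked.sort(key=lambda i: guesses[i][1], reverse=True)
        for i in masked[:tokens_per_step]:
            tokens[i] = guesses[i][0]
        history.append(list(tokens))
    return tokens, history


final_tokens, history = denoise(len(TARGET), tokens_per_step=4)
for step, snapshot in enumerate(history, start=1):
    print(step, " ".join(snapshot))
print("passes:", len(history))  # 3 passes for 12 tokens
```

Twelve tokens are filled in three passes instead of twelve, and the first pass already places `def`, `(`, `:` and `return` before the pieces in between, which is the "global structure first" behavior described above.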

Phew! We made it. So Apple released a model?

Yes. They released an open-source model called DiffuCoder-7B-cpGRPO, which builds on a paper titled DiffuCoder: Understanding and Improving Masked Diffusion Models for Code Generation, released just last month.

The paper describes a model that takes a diffusion-first approach to code generation, but with a twist:

“When the sampling temperature is increased from the default 0.2 to 1.2, DiffuCoder becomes more flexible in its token generation order, freeing itself from strict left-to-right constraints”

This means that by adjusting the temperature, it can also behave either more (or less) like an autoregressive model. In essence, higher temperatures give it more flexibility to generate tokens out of order, while lower temperatures keep it closer to a strict left-to-right decoding.
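To get a feel for why temperature can change the order of generation, here is a toy simulation. It is not how DiffuCoder is implemented: it simply assumes a model that is more confident about positions further left (the logit drops by 1 per position to the right) and that samples which position to fill next from those scores. The function names and numbers are all hypothetical.

```python
import math
import random


def pick_position(masked_positions, temperature, rng):
    """Sample which masked position to fill next.

    Assumption: the toy model prefers positions further left, with a logit
    that drops by 1 for every step to the right.
    """
    logits = [-float(rank) for rank in range(len(masked_positions))]
    weights = [math.exp(x / temperature) for x in logits]
    return rng.choices(masked_positions, weights=weights, k=1)[0]


def left_to_right_fraction(length, temperature, trials, seed=0):
    """Fraction of decoding steps that filled the leftmost remaining position."""
    rng = random.Random(seed)
    leftmost_hits = 0
    total_steps = 0
    for _ in range(trials):
        masked = list(range(length))
        while masked:
            chosen = pick_position(masked, temperature, rng)
            leftmost_hits += chosen == masked[0]
            total_steps += 1
            masked.remove(chosen)
    return leftmost_hits / total_steps


low_temp = left_to_right_fraction(length=8, temperature=0.2, trials=200)
high_temp = left_to_right_fraction(length=8, temperature=1.2, trials=200)
print(f"leftmost-first steps at T=0.2: {low_temp:.2f}")
print(f"leftmost-first steps at T=1.2: {high_temp:.2f}")
```

Under these assumptions, nearly every step at 0.2 fills the leftmost open slot, which looks a lot like autoregressive decoding, while at 1.2 a sizable share of steps jump ahead to a later position.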

And with an extra training step called coupled-GRPO, it learned to generate higher-quality code with fewer passes. The result? Code that’s faster to generate, globally coherent, and competitive with some of the best open-source programming models out there.

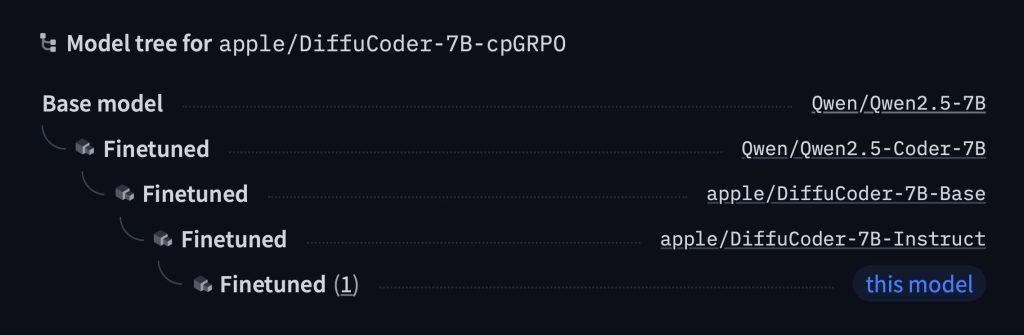

Built on top of an open-source LLM by Alibaba

Even more interestingly, Apple’s model is built on top of Qwen2.5‑7B, an open-source foundation model from Alibaba. Alibaba first fine-tuned that model for better code generation (as Qwen2.5‑Coder‑7B), then Apple took it and made its own adjustments.

They turned it into a new model with a diffusion-based decoder, as described in the DiffuCoder paper, and then adjusted it again to better follow instructions. Once that was done, they trained yet another version of it using more than 20,000 carefully picked coding examples.

And all this work paid off. DiffuCoder-7B-cpGRPO got a 4.4% boost on a popular coding benchmark, and it maintained its lower dependency on generating code strictly from left to right.

Of course, there’s plenty of room for improvement. Although DiffuCoder did better than many diffusion-based coding models (and that was before the 4.4% bump from DiffuCoder-7B-cpGRPO), it still doesn’t quite reach the level of GPT-4 or Gemini Diffusion.

And while some have pointed out that 7 billion parameters might be limiting, or that its diffusion-based generation still resembles a sequential process, the bigger point is this: little by little, Apple has been laying the groundwork for its generative AI efforts with some pretty interesting and novel ideas.

Whether (or if? When?) that will actually translate into real features and products for users and developers is another story.
